Algorithm designs soft robots that sense

Deep learning technique optimizes the arrangement of sensors on a robot’s body to ensure efficient operation.

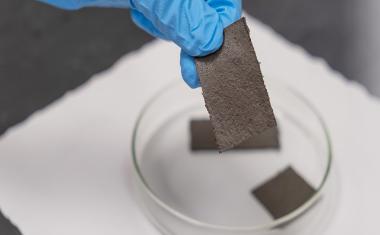

There are some tasks that traditional robots (the rigid and metallic kind) simply aren’t cut out for. Soft-bodied robots, on the other hand, may be able to interact with people more safely or slip into tight spaces with ease. But for robots to reliably complete their programmed duties, they need to know the whereabouts of all their body parts. That’s a tall task for a soft robot that can deform in a virtually infinite number of ways.

MIT researchers have developed an algorithm to help engineers design soft robots that collect more useful information about their surroundings. The deep-learning algorithm suggests an optimized placement of sensors within the robot’s body, allowing it to better interact with its environment and complete assigned tasks. The advance is a step toward the automation of robot design. “The system not only learns a given task, but also how to best design the robot to solve that task,” says Alexander Amini. “Sensor placement is a very difficult problem to solve. So, having this solution is extremely exciting.”

The research will be presented during April’s IEEE International Conference on Soft Robotics and will be published in the journal IEEE Robotics and Automation Letters. Co-lead authors are Amini and Andrew Spielberg, both PhD students in MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL). Other co-authors include MIT PhD student Lillian Chin and professors Wojciech Matusik and Daniela Rus.

Creating soft robots that complete real-world tasks has been a long-running challenge in robotics. Their rigid counterparts have a built-in advantage: a limited range of motion. Rigid robots’ finite array of joints and limbs usually makes for manageable calculations by the algorithms that control mapping and motion planning. Soft robots are not so tractable.

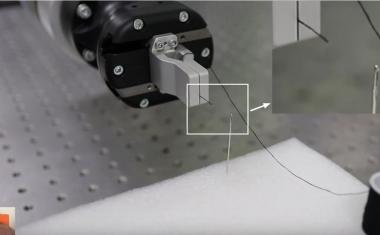

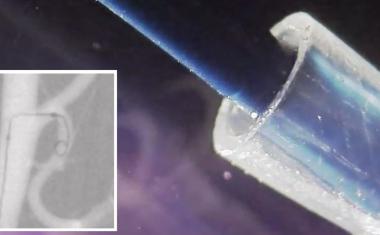

Soft-bodied robots are flexible and pliant — they generally feel more like a bouncy ball than a bowling ball. “The main problem with soft robots is that they are infinitely dimensional,” says Spielberg. “Any point on a soft-bodied robot can, in theory, deform in any way possible.” That makes it tough to design a soft robot that can map the location of its body parts. Past efforts have used an external camera to chart the robot’s position and feed that information back into the robot’s control program. But the researchers wanted to create a soft robot untethered from external aid.

“You can’t put an infinite number of sensors on the robot itself,” says Spielberg. “So, the question is: How many sensors do you have, and where do you put those sensors in order to get the most bang for your buck?” The team turned to deep learning for an answer.

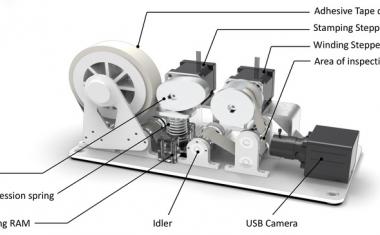

The researchers developed a novel neural network architecture that both optimizes sensor placement and learns to efficiently complete tasks. First, the researchers divided the robot’s body into regions called “particles.” Each particle’s rate of strain was provided as an input to the neural network. Through a process of trial and error, the network “learns” the most efficient sequence of movements to complete tasks, like gripping objects of different sizes. At the same time, the network keeps track of which particles are used most often, and it culls the lesser-used particles from the set of inputs for the network’s subsequent trials.
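The culling step described above can be pictured with a small sketch. This is only an illustration, not the researchers’ implementation: the function names, the halving schedule, and the toy importance scores are all assumptions made for the example. In the real system the per-particle scores would come from the trained network rather than from a fixed list.

```python
# Illustrative sketch only: iteratively cull the least-used "particles"
# until a target number of candidate sensor locations remains.
# All names and numbers here are hypothetical, not from the paper.

def top_particles(scores, keep):
    """Return positions of the `keep` highest scores, in ascending order."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:keep])

def cull_particles(score_fn, n_particles, target, drop_fraction=0.5):
    """Repeatedly score the active particles and drop the lowest-scoring ones.

    score_fn(active) stands in for "train the network on these inputs and
    measure how much each one is used"; it returns one score per active id.
    """
    active = list(range(n_particles))
    while len(active) > target:
        scores = score_fn(active)
        n_keep = int(len(active) * (1 - drop_fraction))
        # Always drop at least one particle, never go below the target.
        n_keep = max(target, min(len(active) - 1, n_keep))
        active = [active[i] for i in top_particles(scores, n_keep)]
    return active

# Toy stand-in for learned usage: particles 12-15 (imagined "fingers")
# matter far more than the rest of the body.
TOY_USAGE = [0.1 + 0.01 * i for i in range(12)] + [1.0, 1.1, 1.2, 1.3]

def toy_score_fn(active):
    return [TOY_USAGE[i] for i in active]

sensor_sites = cull_particles(toy_score_fn, n_particles=16, target=4)
print(sensor_sites)
```

With these made-up scores, the surviving particles are the four “finger” particles, which mirrors the idea that the remaining inputs mark where sensors are worth placing.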

By optimizing the most important particles, the network also suggests where sensors should be placed on the robot to ensure efficient performance. For example, in a simulated robot with a grasping hand, the algorithm might suggest that sensors be concentrated in and around the fingers, where precisely controlled interactions with the environment are vital to the robot’s ability to manipulate objects. While that may seem obvious, it turns out the algorithm vastly outperformed humans’ intuition on where to site the sensors.

The researchers pitted their algorithm against a series of expert predictions. For three different soft robot layouts, the team asked roboticists to manually select where sensors should be placed to enable the efficient completion of tasks like grasping various objects. Then they ran simulations comparing the human-sensorized robots to the algorithm-sensorized robots. And the results weren’t close. “Our model vastly outperformed humans for each task, even though I looked at some of the robot bodies and felt very confident on where the sensors should go,” says Amini. “It turns out there are a lot more subtleties in this problem than we initially expected.”

Spielberg says their work could help to automate the process of robot design. In addition to developing algorithms to control a robot’s movements, “we also need to think about how we’re going to sensorize these robots, and how that will interplay with other components of that system,” he says. And better sensor placement could have industrial applications, especially where robots are used for fine tasks like gripping. “That’s something where you need a very robust, well-optimized sense of touch,” says Spielberg. “So, there’s potential for immediate impact.”

“Automating the design of sensorized soft robots is an important step toward rapidly creating intelligent tools that help people with physical tasks,” says Rus. “The sensors are an important aspect of the process, as they enable the soft robot to ‘see’ and understand the world and its relationship with the world.”