Robot learns fast and safe navigation strategy

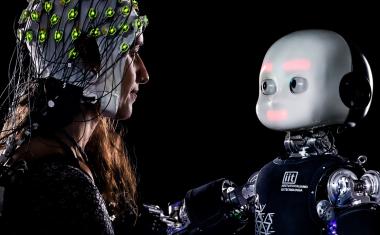

Researchers have proposed a new framework that combines deep reinforcement learning (DRL) and curriculum learning for training mobile robots to quickly navigate while maintaining low collision rates.

One of the basic requirements of autonomous mobile robots is their navigation capability. The robot must be able to navigate from its current position to a specified target position on the map, given as coordinates, while avoiding surrounding obstacles. In some cases, the robot also needs to move fast enough to reach its destination as quickly as possible. However, robots that navigate faster usually run a higher risk of collision, which makes navigation unsafe and endangers both the robot and its surroundings.

To solve this problem, a research group from the Active Intelligent System Laboratory (AISL) in the Department of Computer Science and Engineering at Toyohashi University of Technology (TUT) proposed a new framework that balances speed and safety in robot navigation. The framework enables the robot to learn a policy for fast but safe navigation in an indoor environment by using deep reinforcement learning and curriculum learning.

Chandra Kusuma Dewa, a doctoral student and the first author of the paper, explained that DRL can enable the robot to learn appropriate actions based on the current state of the environment (e.g., robot position and obstacle placements) by repeatedly trying various actions. Furthermore, the paper explains that execution of the current action stops as soon as the robot reaches the goal position or collides with an obstacle. This matters because learning algorithms assume that each action has been fully executed by the robot, and the consequence of that action is what is used to improve the policy. In this way, the proposed framework helps keep the learning environment consistent so that the robot can learn a better navigation policy.
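The idea of cutting an action short at the goal or at a collision can be illustrated with a small sketch. This is not the authors' implementation: the function `execute_action`, the point-robot kinematics, the circular obstacles, and all default values (`dt`, `goal_radius`, `robot_radius`) are assumptions made up for illustration. The sketch runs one action in short sub-steps and returns as soon as a terminal event occurs, so the state reported to the learner matches what actually happened.

```python
import math

def execute_action(pos, heading, speed, duration, goal, obstacles,
                   dt=0.05, goal_radius=0.2, robot_radius=0.15):
    """Run one action in small sub-steps (hypothetical toy simulator).

    obstacles is a list of (x, y, radius) circles. Returns the final
    position and a status: "goal", "collision", or "running".
    """
    x, y = pos
    steps = int(round(duration / dt))
    for _ in range(steps):
        # Advance the point robot along its heading for one sub-step.
        x += speed * math.cos(heading) * dt
        y += speed * math.sin(heading) * dt

        # Stop immediately once the goal region is reached.
        if math.hypot(goal[0] - x, goal[1] - y) <= goal_radius:
            return (x, y), "goal"

        # Stop immediately on contact with any obstacle.
        for ox, oy, orad in obstacles:
            if math.hypot(ox - x, oy - y) <= orad + robot_radius:
                return (x, y), "collision"

    # The action ran for its full duration without a terminal event.
    return (x, y), "running"

if __name__ == "__main__":
    final_pos, status = execute_action(
        pos=(0.0, 0.0), heading=0.0, speed=1.0, duration=2.0,
        goal=(5.0, 0.0), obstacles=[(0.5, 0.0, 0.1)])
    print(final_pos, status)
```

In a training loop, the returned status would decide whether the episode ends and which reward is assigned; those details are left out here because the article does not describe them.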

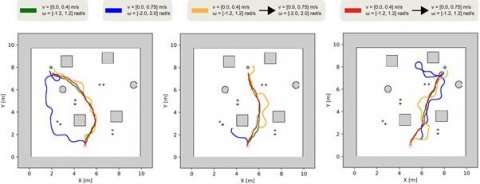

In addition, Professor Jun Miura, the head of AISL at TUT, explained that the framework follows a curriculum learning strategy: the robot's velocity is set to a small value in the early training episodes and is increased gradually as the number of episodes grows. The robot can thus learn the complex task of fast but safe navigation step by step, starting from the easiest level (slow movement) and progressing to the most difficult level (fast movement).
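A velocity curriculum of this kind can be sketched in a few lines. The article does not state how the velocity grows or which values are used, so the linear ramp, the function name `curriculum_max_speed`, and the speeds `v_start` and `v_end` below are all illustrative assumptions rather than the schedule from the paper.

```python
def curriculum_max_speed(episode, total_episodes, v_start=0.2, v_end=1.0):
    """Return the speed limit for a given episode (hypothetical schedule).

    The limit rises linearly from v_start at episode 0 to v_end at the
    last episode; values outside that range are clamped.
    """
    if total_episodes <= 1:
        return v_end
    # Fraction of training completed, clamped to [0, 1].
    frac = min(max(episode / (total_episodes - 1), 0.0), 1.0)
    return v_start + frac * (v_end - v_start)

if __name__ == "__main__":
    total = 101
    for ep in (0, 25, 50, 75, 100):
        print(ep, round(curriculum_max_speed(ep, total), 3))
```

A stepwise schedule, or one that raises the speed only after the success rate passes a threshold, would fit the same description; the linear ramp is simply the shortest option to show.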

Because collisions during the training phase are undesirable, research on learning algorithms is usually conducted in a simulated environment, and the research group simulated an indoor environment for its experiments. In those experiments, the proposed framework enabled the robot to navigate faster, with the highest success rate among the frameworks compared, in both training and validation. Based on these evaluation results, the research group believes that the framework is valuable and can be widely used to train mobile robots in any field that requires fast but safe navigation.